How Modernizing ETL Processes Helps You Uncover Business Intelligence

We live in a world of data: there is more of it than ever before, in an ever-expanding array of formats and locations. Data management is how data teams tackle the challenges of this new world to help their organizations and their clients thrive.

In recent years, data has become vastly more accessible to organizations. This is due to the growing number of data storage systems, the falling cost of data storage, and modern ETL processes that make storing and accessing data easier than ever. This has allowed organizations to grow in every aspect of their business. Being data-driven has become ubiquitous and essential to survival in the current climate. This article discusses how modernizing ETL processes helps organizations unlock the benefits of Business Intelligence in day-to-day work.

First of all, what exactly is an ETL process?

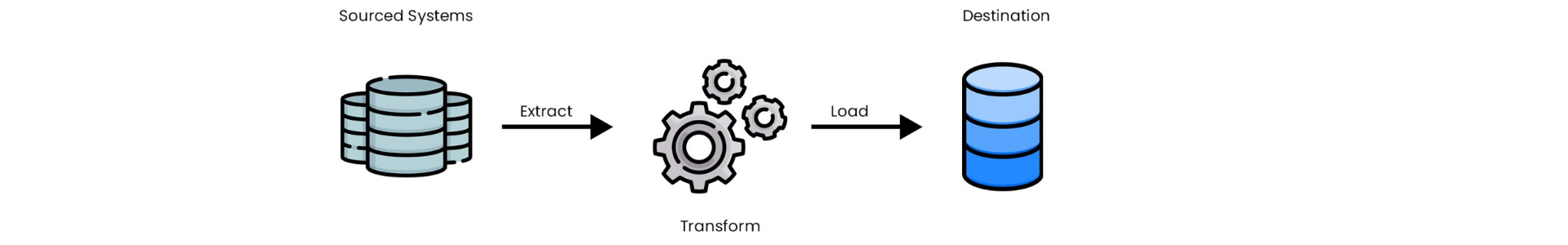

ETL stands for Extract, Transform, and Load. It is the backbone of modern data-driven organizations and is usually described in terms of its three stages: extraction, transformation, and loading.

- Extraction: Raw data is extracted or obtained from different sources (such as a database, application, or API).

- Transformation: The extracted raw data is transformed, cleaned (made free from errors), and aligned with the target so that it is easier for the end user to read.

- Loading: Once the data has been transformed according to the user's needs, it is loaded into a target system, which is typically a Business Intelligence (BI) tool or a database.
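The three stages above can be sketched in a few lines of Python. This is only a minimal illustration, not a production pipeline: the inline CSV text, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the BI tool or database that would be the real target.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a database,
# application, or API.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,bob,5
3,,12.50
"""


def extract(raw_text):
    """Extraction: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transformation: clean the rows and shape them for the target table."""
    cleaned = []
    for row in rows:
        customer = row["customer"].strip().title()
        if not customer:
            continue  # drop rows that fail a basic validity check
        cleaned.append((int(row["order_id"]), customer, float(row["amount"])))
    return cleaned


def load(conn, rows):
    """Loading: write the cleaned rows into the target system."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn, raw_text=RAW_CSV):
    """Run the three stages in order and return what landed in the target."""
    load(conn, transform(extract(raw_text)))
    return conn.execute(
        "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
    ).fetchall()
```

Calling `run_etl(sqlite3.connect(":memory:"))` loads the two valid orders and skips the row with no customer name.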

Understanding the ETL Process: Foundation of data-driven organizations

Every organization wants every team in the business to make smarter, data-driven decisions. Customer support teams look at trends in support tickets or analyze them in depth to find areas where better onboarding and documentation would help. Marketing teams want better visibility into their ad performance across different platforms and the ROI on their spend. Product and engineering teams dig into productivity metrics or bug reports to help them make better use of their resources.

ETL processes give these teams the data they need to understand and do their jobs better. Organizations ingest data from a wide array of sources through the ETL process. The prepared data is then available for analysis and use by the teams who need it, as well as for advanced analytics, embedding into applications, and other data monetization projects. Whatever you want to do with the data, it has to pass through ETL first.

This entire ETL process is very hard to maintain. It often requires full-time data engineers to write and maintain the scripts that keep the data flowing. This is because data providers frequently change their schemas or APIs, which then breaks the scripts that power the ETL process. Each time there is a change, the data engineers scramble to update their scripts to accommodate it, resulting in downtime. With organizations now needing to ingest data from many disparate sources, maintaining ETL scripts for each one does not scale.
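To make the maintenance problem concrete, here is a small sketch of the kind of guard a hand-written ETL script might need so that a provider's schema change fails loudly instead of silently corrupting downstream data. The field names and the `SchemaDriftError` class are hypothetical, chosen only for illustration.

```python
# Hypothetical contract: the fields this script expects from the provider.
EXPECTED_FIELDS = {"id", "email", "created_at"}


class SchemaDriftError(Exception):
    """Raised when a source record no longer matches the expected schema."""


def check_schema(record, expected=EXPECTED_FIELDS):
    """Fail if expected fields are missing; return any unexpected new fields."""
    actual = set(record)
    missing = expected - actual
    if missing:
        raise SchemaDriftError(f"missing fields: {sorted(missing)}")
    # New fields do not break the load, but are worth reporting.
    return sorted(actual - expected)
```

Every source needs a check like this, and every upstream change means revisiting it, which is why maintaining one script per source becomes hard to sustain.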

Modernizing ETL Processes makes life better

The modern ETL process follows a slightly different order of operations, known as ELT (Extract, Load, Transform). This new process emerged from the introduction of tools that modernize the ETL process, as well as the rise of modern data warehouses with relatively low storage costs.

Today, ETL tools do the heavy lifting for you. They offer integrations for many of the major SaaS applications and have teams of engineers who maintain those integrations, taking the pressure off your in-house data team. These ETL tools are built to connect to the major data warehouses, allowing organizations to plug in their applications on one end and their warehouse on the other while the ETL tool does the rest.

Users can generally control orchestration through simple drop-down menus inside the applications, removing the need to stand up your own servers or EC2 box or to build DAGs to run on platforms like Airflow. ETL tools also typically offer more robust options for appending new data incrementally or updating only new and modified rows, which allows for more frequent loads and closer to real-time data for the business. With this simplified process for making data available for analysis, data teams can find new applications for data to generate value for the company.
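As a rough sketch of what "updating only new and modified rows" means, the snippet below keeps a high-water mark of the last sync and upserts only the rows changed since then. It is an assumption-laden illustration rather than how any particular ETL tool works: the `customers` table, the `updated_at` column, and the `incremental_load` function are hypothetical, and SQLite stands in for the warehouse.

```python
import sqlite3


def incremental_load(conn, source_rows, last_synced_at):
    """Upsert only rows changed since the last sync; return (count, new watermark).

    Timestamps are ISO 8601 strings, which sort correctly as plain text.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
    )
    # Keep only rows that are new or modified since the previous run.
    changed = [r for r in source_rows if r["updated_at"] > last_synced_at]
    # INSERT OR REPLACE overwrites an existing row with the same primary key.
    conn.executemany(
        "INSERT OR REPLACE INTO customers (id, name, updated_at) "
        "VALUES (:id, :name, :updated_at)",
        changed,
    )
    conn.commit()
    new_watermark = max((r["updated_at"] for r in changed), default=last_synced_at)
    return len(changed), new_watermark
```

Because each run touches only the changed rows, loads stay small enough to run frequently, which is what brings the warehouse closer to real-time data.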

ETL Processes and data warehouses

Data warehouses are the present and future of data and analytics. Storage costs on data warehouses have dropped in recent years, which allows organizations to load as many raw data sources as possible without the concerns they might have had before.

Today, data teams can ingest raw data before transforming it, allowing them to run transformations inside the warehouse rather than in a separate staging area. With the increased availability of data and a common language for accessing it, SQL, the business has more flexibility in using its data to make the right decisions.
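The load-first, transform-later pattern can be sketched as follows. This is a minimal example under stated assumptions: SQLite plays the role of the cloud data warehouse, and the `raw_events` and `revenue_by_user` tables and the `elt` function are hypothetical names.

```python
import sqlite3


def elt(conn, raw_events):
    """Load raw rows untouched, then transform them with SQL in the warehouse."""
    # Load: raw data lands as-is, with every column kept as text.
    conn.execute("CREATE TABLE raw_events (user_id TEXT, event TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", raw_events)

    # Transform: build a modeled table from the raw one, entirely in SQL.
    conn.execute(
        """
        CREATE TABLE revenue_by_user AS
        SELECT user_id, SUM(CAST(amount AS REAL)) AS revenue
        FROM raw_events
        WHERE event = 'purchase'
        GROUP BY user_id
        """
    )
    conn.commit()
    return conn.execute(
        "SELECT user_id, revenue FROM revenue_by_user ORDER BY user_id"
    ).fetchall()
```

Since the raw table is preserved, the transformation can be rewritten and rerun later without extracting the data from the source again.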

Modernized ETL processes deliver better and quicker outcomes

Under the traditional ETL process, as data and processing requirements grew, so did the chance that on-premise data warehouses would fail. When this happened, IT had to fix the issue, which usually meant adding more hardware.

The modern ETL process in today's data warehouses sidesteps this issue by offloading system resource management to the cloud data warehouse. Many cloud data warehouses offer compute scaling that adjusts dynamically when demand spikes. This lets data teams keep seeing scalable performance while maintaining a growing number of computationally expensive data models and ingesting more and larger data sources. The lower cost of compute power, together with compute scaling in cloud data warehouses, allows data teams to scale resources up or down efficiently to suit their needs and better guard against downtime. In short, rather than having your in-house data or IT team worry about data storage and compute issues, you can offload that almost entirely to the data warehouse provider.

Data teams can then build tests on top of their cloud data warehouse to monitor their data sources for quality, freshness, and so on, giving them quicker, more proactive visibility into any issues with their data pipelines.
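Such tests are often just SQL queries whose results are compared against a threshold. The sketch below assumes a hypothetical `orders` table with `order_id`, `customer_id`, and an ISO 8601 `loaded_at` column, and again uses SQLite in place of a cloud warehouse; the check names and the 24-hour freshness window are illustrative choices.

```python
import sqlite3
from datetime import datetime, timedelta


def run_checks(conn, now, max_age=timedelta(hours=24)):
    """Return the names of the quality and freshness checks that failed."""
    failures = []

    # Quality: every order should reference a customer.
    null_ids = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE customer_id IS NULL"
    ).fetchone()[0]
    if null_ids:
        failures.append("null_customer_id")

    # Quality: order_id should be unique.
    duplicates = conn.execute(
        "SELECT COUNT(*) FROM "
        "(SELECT order_id FROM orders GROUP BY order_id HAVING COUNT(*) > 1)"
    ).fetchone()[0]
    if duplicates:
        failures.append("duplicate_order_id")

    # Freshness: the most recent load should be newer than max_age.
    latest = conn.execute("SELECT MAX(loaded_at) FROM orders").fetchone()[0]
    if latest is None or now - datetime.fromisoformat(latest) > max_age:
        failures.append("stale_data")

    return failures
```

Run on a schedule, a function like this can alert the data team when a pipeline stops delivering or starts delivering bad rows, before the business notices.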
