Introduction

This article shows how Talend Real-time Big Data can be used to apply Talend’s real-time data processing and machine learning capabilities. The use case is processing Twitter data in real time and classifying whether the person tweeting has post-traumatic stress disorder (PTSD). The same solution can be adapted to other major health conditions, such as cancer, as discussed at the end.

What is PTSD?

PTSD is a mental disorder that can develop after a person is exposed to a traumatic event, such as sexual assault, warfare, traffic collisions, or other threats to a person’s life.

Statistics about PTSD

- 70% of adults in the U.S. have experienced some traumatic event at least once in their lives, and up to 20% of these people go on to develop PTSD.

- An estimated 8% of Americans, 24.4 million people, have PTSD at any given time.

- An estimated one out of every nine women develops PTSD, making women about twice as likely as men to develop it.

- Almost 50% of all outpatient mental health patients have PTSD.

- Among people who are victims of a severe traumatic experience, 60 – 80% will develop PTSD.

Source: Taking a look at PTSD statistics

Insights into the solution

With the number of social network users growing rapidly, an enormous amount of data is written to social networks every day. Handling data at that volume calls for a Hadoop ecosystem. Because Twitter is our data source, we treat this PTSD use case as a Big Data use case.

Spark Framework

Apache Spark™ is a fast and general engine for large-scale data processing.

Random Forest Model

Random forest is an ensemble learning method for classification, regression, and other tasks that operates by constructing a multitude of decision trees at training time and outputting the class that is the mode of the classes (classification) or the mean prediction (regression) of the individual trees.
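As a rough illustration of that aggregation step only, the sketch below combines the outputs of already-trained trees. The function names and sample values are hypothetical, and this is not how Talend or Spark implements random forests internally.

```python
from collections import Counter
from statistics import mean


def forest_classify(tree_votes):
    """Return the most common class label among the trees' votes (the mode)."""
    return Counter(tree_votes).most_common(1)[0][0]


def forest_regress(tree_predictions):
    """Return the mean of the trees' numeric predictions."""
    return mean(tree_predictions)


# Hypothetical outputs from five classification trees and three regression trees.
votes = ["Yes", "No", "Yes", "Yes", "No"]
label = forest_classify(votes)
score = forest_regress([0.5, 1.0, 0.0])
```

Here three of the five trees vote Yes, so the forest's classification is Yes.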

Hadoop Cluster (Cloudera)

A Hadoop cluster is a special type of computational cluster designed specifically for storing and analyzing huge amounts of unstructured data in a distributed computing environment.

Hashing TF

As a text-processing algorithm, Hashing TF converts input data into fixed-length feature vectors that reflect the importance of a term (a word or a sequence of words) by calculating how frequently the term appears in the input data.
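The idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the vector length of 16 and the use of CRC32 as the hash function are choices made for the example, not what Talend or Spark uses.

```python
import zlib


def hashing_tf(tokens, num_features=16):
    """Map tokens to a fixed-length term-frequency vector via the hashing trick."""
    vector = [0] * num_features
    for token in tokens:
        # crc32 is deterministic across runs, unlike Python's built-in hash() for str.
        # It is a stand-in chosen for this example only.
        index = zlib.crc32(token.encode("utf-8")) % num_features
        vector[index] += 1
    return vector


# Hypothetical tweet text, split on whitespace for simplicity.
tokens = "i cannot sleep i keep reliving it".split()
vec = hashing_tf(tokens)
```

The vector always has `num_features` entries regardless of vocabulary size. Different terms can collide in the same slot, which is the trade-off for not having to keep a dictionary of terms.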

Talend Studio for Real Time Big Data

Talend Studio for Real-time Big Data is used to design and run MapReduce, Spark, and real-time Big Data Jobs.

Inverse Document Frequency

As a text-processing algorithm, Inverse Document Frequency (IDF) is often used to process the output of the Hashing TF computation in order to downplay the importance of the terms that appear in too many documents.
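A minimal sketch of the weighting follows. For readability it works on tokens rather than on hashed indices, and it assumes the smoothed formula log((N + 1) / (df + 1)), where N is the number of documents and df is the number of documents containing the term. The sample documents are hypothetical.

```python
import math


def inverse_document_frequency(documents):
    """Compute a smoothed IDF weight for every term in a list of tokenized documents."""
    num_docs = len(documents)
    doc_freq = {}
    for tokens in documents:
        # Count each term once per document, however often it repeats.
        for term in set(tokens):
            doc_freq[term] = doc_freq.get(term, 0) + 1
    return {
        term: math.log((num_docs + 1) / (df + 1))
        for term, df in doc_freq.items()
    }


# Hypothetical tokenized tweets.
docs = [
    ["i", "have", "nightmares"],
    ["i", "have", "flashbacks"],
    ["i", "love", "coffee"],
]
idf = inverse_document_frequency(docs)
```

A term that appears in every document ("i") gets a weight of zero, while a term that appears in only one document ("nightmares") gets the highest weight.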

Kafka Service

Apache Kafka is an open-source stream processing platform written in Scala and Java to provide a unified, high-throughput, low-latency platform for handling real-time data feeds.

Regex Tokenizer

Regex tokenizer performs advanced tokeni

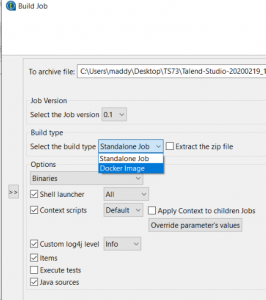

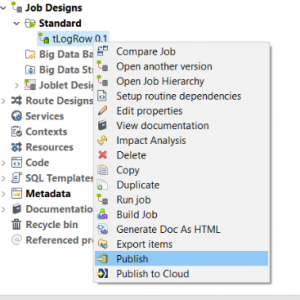

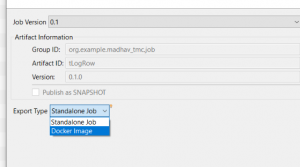

Step 1: Retrieve data from Twitter using Talend

Talend Studio supports not only Talend’s own components but also custom-built components from third parties. These custom-built components are available from Talend Exchange, an online component store.

- Taking advantage of a custom Twitter component, we can get data from Twitter by accessing both REST and Stream APIs.

- To take advantage of the Hadoop ecosystem for Big Data, we implemented a real-time Kafka service to read data from Twitter.

- Talend Studio for Real-time Big Data has Kafka components that we can use to consume the data collected by the Kafka service and pass it on to the next stages of the design in real time.

To perform all of the above, we need to get access to the Twitter API.

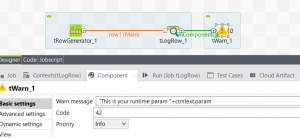

Snapshots of Talend Job designs

Deciding which hashtags to use plays a vital role. We may use a single hashtag or a combination of multiple hashtags to pull the relevant data. Choosing appropriate hashtags helps to filter the large volume of source data.
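The filtering logic can be sketched as follows. This is only an illustration of matching on one hashtag versus a combination of hashtags. The sample tweets, the helper names, and the `require_all` switch are hypothetical, and in the actual solution the filtering is configured in the Twitter component rather than written by hand.

```python
import re

HASHTAG_PATTERN = re.compile(r"#(\w+)")


def extract_hashtags(text):
    """Return the set of lowercase hashtags (without '#') found in the text."""
    return {tag.lower() for tag in HASHTAG_PATTERN.findall(text)}


def matches(text, wanted, require_all=False):
    """Check whether the text carries any (or all) of the wanted hashtags."""
    found = extract_hashtags(text)
    wanted = {w.lower().lstrip("#") for w in wanted}
    return wanted <= found if require_all else bool(wanted & found)


# Hypothetical sample tweets.
tweets = [
    "Another sleepless night #PTSD #veterans",
    "Great game last night! #sports",
    "Therapy is helping #ptsd",
]
any_match = [t for t in tweets if matches(t, ["#ptsd"])]
all_match = [t for t in tweets if matches(t, ["#ptsd", "#veterans"], require_all=True)]
```

Requiring a combination of hashtags narrows the result from two tweets to one, which shows how the hashtag choice controls the volume of data pulled.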

Step 2: Create and train the model using Talend

Human intervention is needed at this stage. Once the data pulled from Twitter is in place, we need to manually classify each tweet as Having PTSD or Not Having PTSD.

Classification is done by adding a new attribute to the data, with the value Yes or No (Yes – having PTSD, No – not having PTSD). Once classified, this data becomes the training set used to create and train the model.
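A minimal sketch of what such a labeled training set might look like is shown below. The attribute names `Tweet` and `Label`, the CSV layout, and the sample rows are assumptions made for illustration. The labels themselves still come from a human reviewer.

```python
import csv
import io

VALID_LABELS = {"Yes", "No"}


def build_training_set(tweets, labels):
    """Pair each tweet with its manually assigned label (Yes or No)."""
    if len(tweets) != len(labels):
        raise ValueError("every tweet needs exactly one label")
    for label in labels:
        if label not in VALID_LABELS:
            raise ValueError(f"unexpected label: {label!r}")
    return [{"Tweet": t, "Label": l} for t, l in zip(tweets, labels)]


def to_csv(rows):
    """Serialize the training rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["Tweet", "Label"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical tweets with labels assigned by a reviewer.
rows = build_training_set(
    ["The flashbacks will not stop", "Lovely weather today"],
    ["Yes", "No"],
)
training_csv = to_csv(rows)
```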

For this use case, the training data must undergo some transformations before the model is created, such as:

- Hashing TF

- Regex Tokenizer

- Inverse Document Frequency

- Vector Conversion

After passing through all the algorithms above, training data can be passed into the model to create and train it. The model that suits this prediction use case best is the Random Forest Model.
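Two of the listed transformations, regex tokenization and vector conversion, can be sketched in plain Python as below. The token pattern, the helper names, and the sample values are assumptions for illustration and do not reflect the configuration of the Talend components. Note also that tokenization normally has to run before term frequencies can be computed, so the order of the list above is not necessarily the execution order.

```python
import re

# Assumed pattern: words, optionally prefixed by '#' or '@' so hashtags and mentions survive.
TOKEN_PATTERN = re.compile(r"[#@]?\w+")


def regex_tokenize(text):
    """Split lowercase text into tokens that match the regular expression."""
    return TOKEN_PATTERN.findall(text.lower())


def to_dense_vector(sparse_weights, size):
    """Convert an {index: weight} mapping into a fixed-length list of floats."""
    vector = [0.0] * size
    for index, weight in sparse_weights.items():
        vector[index] = weight
    return vector


# Hypothetical tweet and hypothetical term weights.
tokens = regex_tokenize("Nightmares again... #PTSD @friend!")
dense = to_dense_vector({1: 0.5, 3: 1.2}, size=5)
```

The tokenizer drops punctuation and keeps the hashtag and mention intact, and the vector conversion produces the fixed-length numeric input that a model expects.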

Talend Studio for Real-time Big Data provides machine learning components that perform regression, classification, and prediction using the Spark framework. Using these components, we created and trained a Random Forest model with the training data. The model is now ready to classify tweets.

Note: All the work is done on a Cloudera Hadoop cluster. Talend is connected to the cluster, and the rest of the computation is handled by Talend.

Snapshot of a Talend Spark Job design

Step 3: Prediction of tweets using Talend

Now we have the model ready on our Hadoop cluster. We can repeat the process in step 1 to pull data from Twitter again, this time to serve as test data. The test data has only one attribute: Tweet.

When the test data is passed to the model we have created, the model adds a new attribute, Label, to the test data, and its value will be Yes or No (Yes – having PTSD, No – not having PTSD). The predicted value depends solely on the way the model was trained in step 2. Again, all of this prediction can be done in Talend Studio for Real-time Big Data using the Spark framework.

Snapshot of a Talend Spark Job design for prediction

Evolution of the model

When the model first classifies the test data set, we find that about 25% of the records are misclassified (on average). We assign the correct classification to those records, add them to the training set, and retrain the model, which should improve its predictions. Keep adding records to the training set and repeating this procedure until the model is accurate enough. A model needs to evolve over time by being retrained with newly available training data, so some ongoing management is required.

Note: To boost the effectiveness of the model, we can add synonym-based variants of the training data to the training set and retrain the model. This grows the training set synthetically rather than only organically.
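One possible way to generate such synonym variants is sketched below. The synonym dictionary, the single-word substitution strategy, and the sample row are all hypothetical. A real setup would need a curated synonym source and a review of the generated tweets.

```python
# Hypothetical synonym dictionary; a real one would come from a curated source.
SYNONYMS = {
    "nightmares": ["night terrors"],
    "scared": ["afraid", "frightened"],
}


def augment_with_synonyms(rows, synonyms):
    """Return the original rows plus one variant per single-word synonym substitution."""
    augmented = list(rows)
    for row in rows:
        words = row["Tweet"].split()
        for i, word in enumerate(words):
            for alternative in synonyms.get(word.lower(), []):
                variant = words[:i] + [alternative] + words[i + 1:]
                # The variant inherits the label of the tweet it was derived from.
                augmented.append({"Tweet": " ".join(variant), "Label": row["Label"]})
    return augmented


rows = [{"Tweet": "I am scared of the nightmares", "Label": "Yes"}]
augmented = augment_with_synonyms(rows, SYNONYMS)
```

One labeled tweet becomes four training records here, each carrying the original label.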

We consider the model accurate only if at least 90% of its predictions are correct. If prediction accuracy drops below 90%, it is time to retrain the model.
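The evaluate, correct, and retrain decision described in this section can be sketched as follows. The function names and the sample predictions are hypothetical, and the "actual" labels are assumed to come from manual review of the predicted records.

```python
ACCURACY_THRESHOLD = 0.90  # the 90% threshold stated above


def evaluate(predicted, actual):
    """Return the accuracy and the positions of the misclassified records."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("predicted and actual must be non-empty and the same length")
    wrong = [i for i, (p, a) in enumerate(zip(predicted, actual)) if p != a]
    accuracy = 1 - len(wrong) / len(actual)
    return accuracy, wrong


def needs_retraining(accuracy, threshold=ACCURACY_THRESHOLD):
    """Flag the model for retraining when accuracy falls below the threshold."""
    return accuracy < threshold


# Hypothetical model output versus manually verified labels.
predicted = ["Yes", "No", "No", "Yes"]
actual = ["Yes", "No", "Yes", "Yes"]
accuracy, wrong = evaluate(predicted, actual)
retrain = needs_retraining(accuracy)
```

The indices in `wrong` identify the records to correct and add to the training set before the next training run.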

Real-time applications from this use case

Note: Once the classification of data is done (Yes or No), it may lead to many more useful real-time applications.

Broader Scope

The solution designed for this use case can work for other major health conditions. For example, for cancer, we can use cancer-specific hashtags to train the model in an equivalent way and start predicting whether the person has cancer. The same kinds of real-time applications discussed above can be achieved.

Authors: Madhav Nalla, Saikrishna Ala, and Kashyap Shah

This article is also published on the Talend Community Blog:

Source: https://community.talend.com/s/article/Unleashing-Talend-Machine-Learning-Capabilities